No need to panic: nothing is actually broken. The problem comes from a default value (the number of replica shards) in newer versions of OpenSearch. It seems that the developers have turned a blind eye to those of us who are perfectly happy running single-node OpenSearch instances.

Requirements

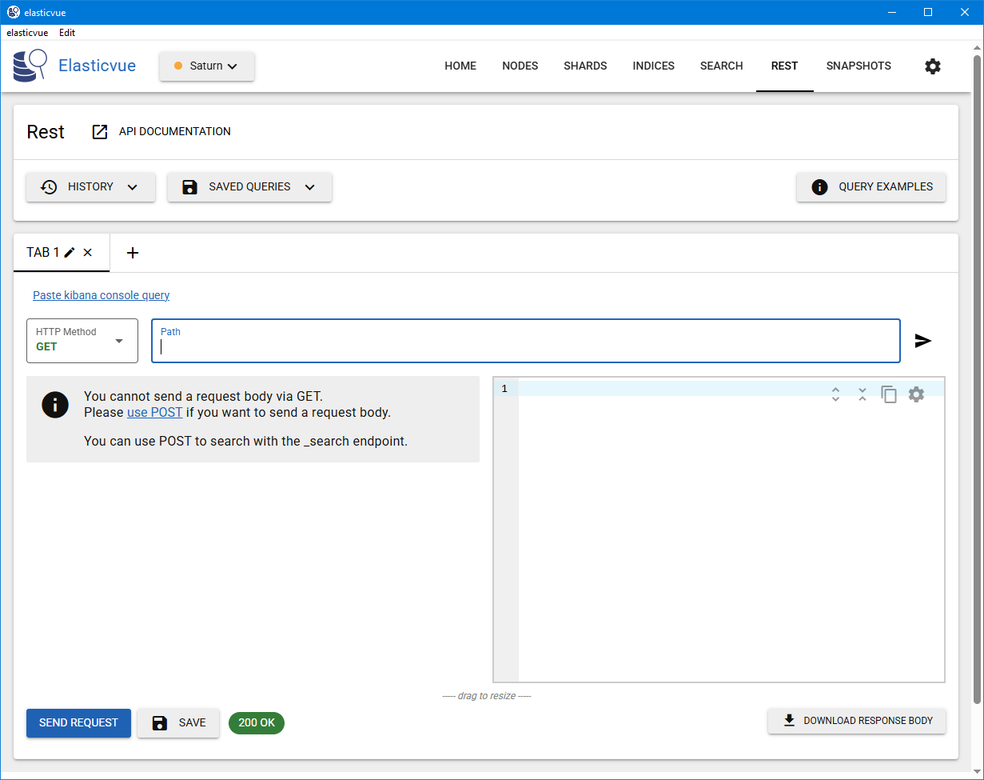

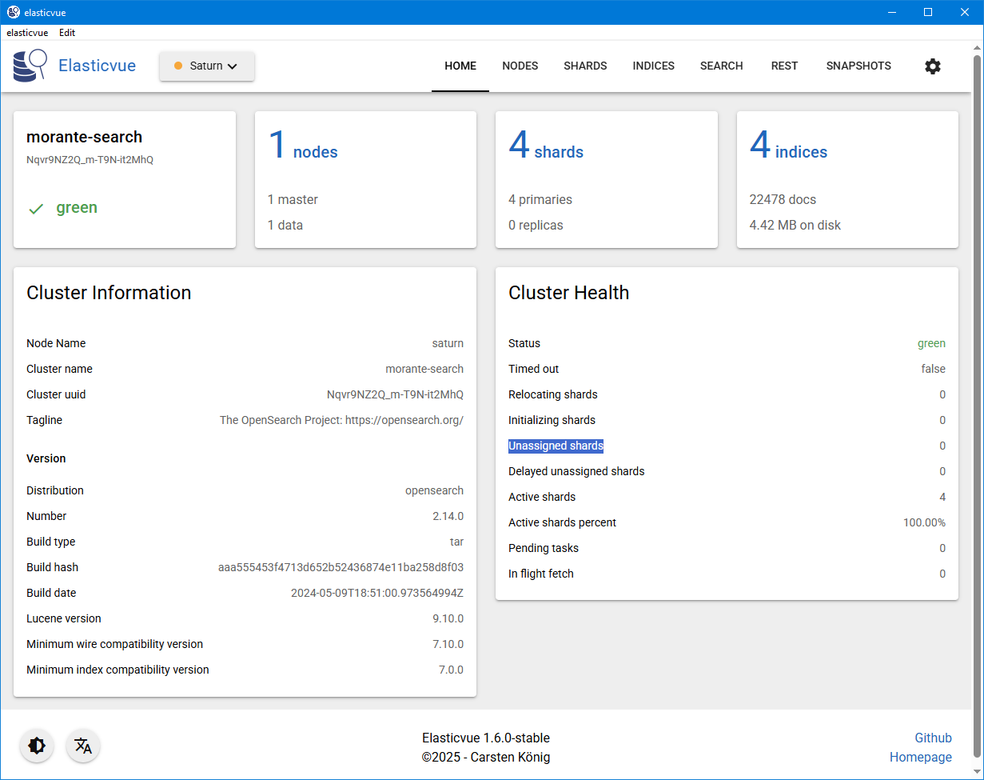

I highly recommend using a GUI tool to query the REST API, because the console can be cumbersome and unpleasant to work with. I personally use an OpenSearch GUI client named "Elasticvue". Just search for the name; it's easy to find, and it can be run in several ways. From this point forward I'll use Elasticvue, and for each step I'll provide a screenshot of what I am doing as well as the API endpoint you need to call.
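If you would rather script the calls than use a GUI, a minimal sketch with Python's standard library could look like the following. It assumes the cluster is reachable over plain HTTP at http://localhost:9200 with no authentication (adjust BASE_URL, and add credentials or TLS, for your own setup). The helper names get_request and send are hypothetical and not part of any OpenSearch client.

```python
import json
import urllib.request

# Assumption: plain HTTP, no authentication. Change to match your cluster.
BASE_URL = "http://localhost:9200"


def get_request(path: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a GET request for a REST path such as
    "_cluster/health" or "_cluster/allocation/explain?pretty"."""
    url = f"{base_url.rstrip('/')}/{path.lstrip('/')}"
    return urllib.request.Request(url, method="GET")


def send(request: urllib.request.Request) -> dict:
    """Send the request and return the decoded JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage (needs a running cluster):
# explain = send(get_request("_cluster/allocation/explain"))
# print(explain["index"])
```

Building the request separately from sending it makes it easy to inspect the exact URL before anything touches the cluster.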

Identify the Problem

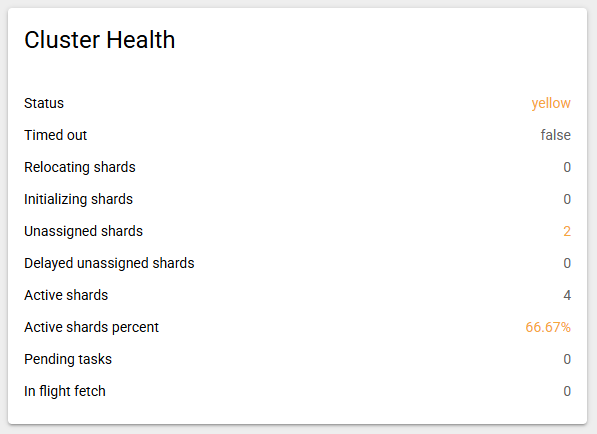

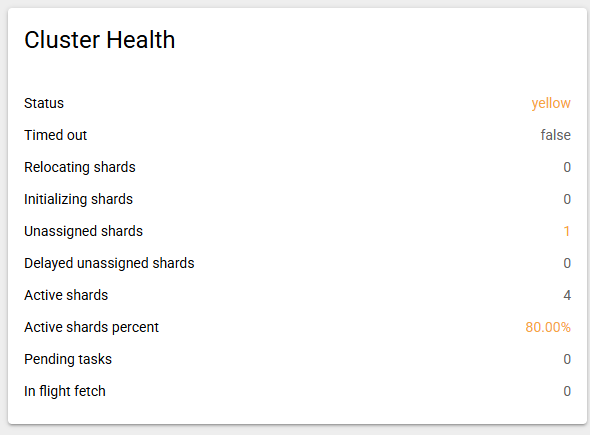

Connect to your (single-node) cluster and look at the "Cluster Health" section, which tells you how many unassigned shards there are. For this specific issue the count is usually small: 1 or 2, maybe 3.
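The same numbers are available from the REST API through GET _cluster/health, whose response includes status, number_of_nodes and unassigned_shards. Below is a small sketch of reading them from an already parsed response. The sample values are made up for illustration and are not from the author's cluster, and summarize_health is a hypothetical helper.

```python
import json

# Illustrative _cluster/health response (trimmed, values made up).
SAMPLE = '{"cluster_name": "opensearch", "status": "yellow", "number_of_nodes": 1, "unassigned_shards": 2}'


def summarize_health(health: dict) -> tuple:
    """Return (status, unassigned shard count, looks_like_this_issue)."""
    status = health["status"]
    unassigned = health["unassigned_shards"]
    # Yellow means every primary is assigned but some replicas are not.
    # On a single node that usually points at replicas with nowhere to go.
    looks_like_this_issue = (
        health["number_of_nodes"] == 1 and unassigned > 0 and status == "yellow"
    )
    return status, unassigned, looks_like_this_issue


print(summarize_health(json.loads(SAMPLE)))  # ('yellow', 2, True)
```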

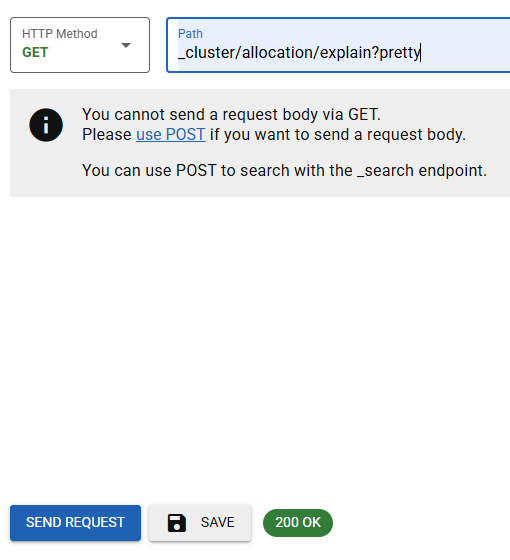

Go to the "REST" section and choose GET as the HTTP method.

In the "Path" box, enter _cluster/allocation/explain?pretty and click "SEND REQUEST".

The result should resemble the example below, but the error should be exactly "cannot allocate because allocation is not permitted to any of the nodes".

```json
{
  "index": ".opensearch-sap-pre-packaged-rules-config",
  "shard": 0,
  "primary": false,
  "current_state": "unassigned",
  "unassigned_info": {
    "reason": "CLUSTER_RECOVERED",
    "at": "2024-10-13T04:26:04.572Z",
    "last_allocation_status": "no_attempt"
  },
  "can_allocate": "no",
  "allocate_explanation": "cannot allocate because allocation is not permitted to any of the nodes",
  "node_allocation_decisions": [
    {
      "node_id": "PGUvqKaISbecpf-KUlFWNQ",
      "node_name": "saturn",
      "transport_address": "192.168.0.246:9300",
      "node_attributes": {
        "shard_indexing_pressure_enabled": "true"
      },
      "node_decision": "no",
      "deciders": [
        {
          "decider": "same_shard",
          "decision": "NO",
          "explanation": "a copy of this shard is already allocated to this node [[.opensearch-sap-pre-packaged-rules-config][0], node[PGUvqKaISbecpf-KUlFWNQ], [P], s[STARTED], a[id=gCALtaocQNSWm2mMrbBWEw]]"
        }
      ]
    }
  ]
}
```

The part you are interested in is the index name. In the example above it's .opensearch-sap-pre-packaged-rules-config. The '.' in front of it means it's a hidden internal index that belongs to OpenSearch itself, not to your application.
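If you fetch the explain output programmatically, the check described above can be sketched as follows. This is a heuristic of my own, and the function name is hypothetical. It only reports an index when the unassigned shard is a replica and a node refuses it through the same_shard decider, which is the single-node pattern in the example.

```python
import json

# Trimmed copy of the allocation explain output shown above.
EXPLAIN = json.loads("""
{
  "index": ".opensearch-sap-pre-packaged-rules-config",
  "shard": 0,
  "primary": false,
  "can_allocate": "no",
  "node_allocation_decisions": [
    {"node_name": "saturn",
     "deciders": [{"decider": "same_shard", "decision": "NO"}]}
  ]
}
""")


def replica_index_to_fix(explain: dict):
    """Return the index name for an unassigned replica blocked by the
    same_shard rule, otherwise None."""
    # Only replicas are affected; a missing "primary" key is treated as "not a replica".
    if explain.get("primary", True) or explain.get("can_allocate") != "no":
        return None
    for node in explain.get("node_allocation_decisions", []):
        for decider in node.get("deciders", []):
            # same_shard NO: this node already holds a copy of the shard.
            if decider.get("decider") == "same_shard" and decider.get("decision") == "NO":
                return explain["index"]
    return None


index = replica_index_to_fix(EXPLAIN)
# A leading period marks a hidden, OpenSearch-internal index.
print(index, "hidden" if index and index.startswith(".") else "regular")
```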

Fix the Problem

It's debatable whether this is a fix or a workaround. The root cause is an upstream change where the default number of replica shards is 1 when indices are created. Because the index in question is an internal one used by OpenSearch, neither you nor your application could have specified its replica count; the index was probably created automatically while an update was being applied. OpenSearch never places a replica on the same node as its primary (that is what the same_shard decider in the output above reports), so on a single-node cluster those replica shards have nowhere to go whenever the setting is 1 or more.
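The arithmetic behind "nowhere to go" is simple. Two copies of the same shard may not share a node, so a cluster with N nodes can hold at most N - 1 replicas of each shard. The sketch below counts the leftover replicas while deliberately ignoring every other allocation rule, such as disk watermarks or awareness attributes. The function name is hypothetical.

```python
def unplaceable_replicas(primary_shards: int, replicas: int, nodes: int) -> int:
    """Count replica copies that cannot be assigned under the same_shard
    rule alone: each shard can place at most (nodes - 1) replicas."""
    placeable_per_shard = max(0, nodes - 1)
    return primary_shards * max(0, replicas - placeable_per_shard)


# A single-node cluster with one 1-shard index and the default of 1 replica:
print(unplaceable_replicas(primary_shards=1, replicas=1, nodes=1))  # 1
# The same index after setting number_of_replicas to 0:
print(unplaceable_replicas(primary_shards=1, replicas=0, nodes=1))  # 0
```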

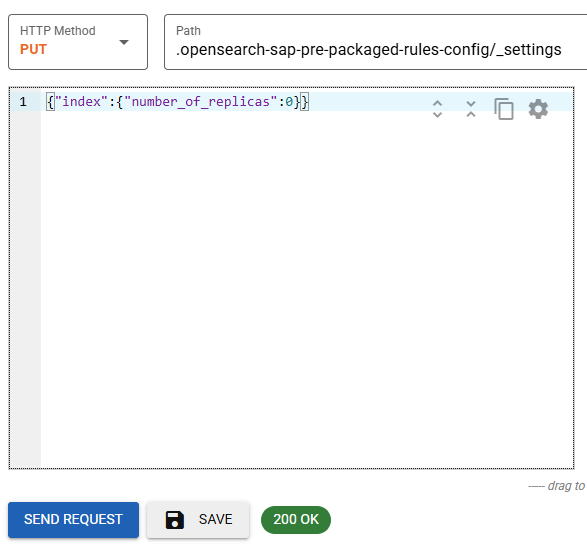

What we are going to do is change the number of replica shards for that index to 0, meaning: don't replicate it. Return to whichever tool you used to make the REST queries.

Change the HTTP method to "PUT" and enter .opensearch-sap-pre-packaged-rules-config/_settings as the path. If your index name is different, replace it with the value next to "index" in the JSON result returned previously. Remember that the leading period '.' is part of the name and is important!

We must specify a payload for the request to actually change the "number_of_replicas" value to 0. Enter the payload below:

```json
{"index":{"number_of_replicas":0}}
```

Your screen should look like the one below. Click "SEND REQUEST" to apply the change.

The response from OpenSearch should be:

```json
{
  "acknowledged": true
}
```

Go back to the HOME screen in Elasticvue (or whatever tool or method you are using) and check the status in the Cluster Health section. The unassigned shards count should have gone down by one.
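The same settings change can also be sent from a script. Below is a minimal sketch using Python's standard library. It assumes the cluster is at http://localhost:9200 with no authentication, and replica_fix_request is a hypothetical helper name. It only builds the request, so you can inspect it before sending.

```python
import json
import urllib.request

BASE_URL = "http://localhost:9200"  # assumption: local cluster, no auth


def replica_fix_request(index: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a PUT <index>/_settings request that sets number_of_replicas to 0.
    The index name is used verbatim, so keep its leading period."""
    if not index:
        raise ValueError("index name is required")
    url = f"{base_url.rstrip('/')}/{index}/_settings"
    body = json.dumps({"index": {"number_of_replicas": 0}}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


req = replica_fix_request(".opensearch-sap-pre-packaged-rules-config")
print(req.get_method(), req.full_url)
# Sending it with urllib.request.urlopen(req) should return {"acknowledged": true}.
```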

Repeat the same process (run the allocation explain request again, then update the index it reports) to fix the remaining indices. In my case there was only one other index left, named .opensearch-sap-log-types-config:
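Instead of repeating the explain request until nothing is left, you could list every unassigned replica at once with GET _cat/shards?format=json&h=index,shard,prirep,state. That call returns one JSON object per shard, and prirep is "p" for a primary or "r" for a replica. The sketch below filters that output. The sample rows are hypothetical: they are modeled on the two indices in this post, and my-app-logs is an invented name. I'm also assuming hidden indices appear in the listing on your version.

```python
import json

# Hypothetical _cat/shards?format=json&h=index,shard,prirep,state output.
ROWS = json.loads("""
[
  {"index": ".opensearch-sap-pre-packaged-rules-config", "shard": "0", "prirep": "p", "state": "STARTED"},
  {"index": ".opensearch-sap-pre-packaged-rules-config", "shard": "0", "prirep": "r", "state": "UNASSIGNED"},
  {"index": ".opensearch-sap-log-types-config", "shard": "0", "prirep": "p", "state": "STARTED"},
  {"index": ".opensearch-sap-log-types-config", "shard": "0", "prirep": "r", "state": "UNASSIGNED"},
  {"index": "my-app-logs", "shard": "0", "prirep": "p", "state": "STARTED"}
]
""")


def indices_with_unassigned_replicas(rows: list) -> list:
    """Return the sorted, de-duplicated names of indices that have at least
    one unassigned replica shard."""
    return sorted({
        row["index"]
        for row in rows
        if row.get("prirep") == "r" and row.get("state") == "UNASSIGNED"
    })


for name in indices_with_unassigned_replicas(ROWS):
    # Each of these would get the same PUT <index>/_settings payload.
    print(f"{name}/_settings")
```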

```json
{
  "index": ".opensearch-sap-log-types-config",
  "shard": 0,
  "primary": false,
  "current_state": "unassigned",
  "unassigned_info": {
    "reason": "CLUSTER_RECOVERED",
    "at": "2024-10-13T04:26:04.572Z",
    "last_allocation_status": "no_attempt"
  },
  "can_allocate": "no",
  "allocate_explanation": "cannot allocate because allocation is not permitted to any of the nodes",
  "node_allocation_decisions": [
    {
      "node_id": "PGUvqKaISbecpf-KUlFWNQ",
      "node_name": "saturn",
      "transport_address": "192.168.0.246:9300",
      "node_attributes": {
        "shard_indexing_pressure_enabled": "true"
      },
      "node_decision": "no",
      "deciders": [
        {
          "decider": "same_shard",
          "decision": "NO",
          "explanation": "a copy of this shard is already allocated to this node [[.opensearch-sap-log-types-config][0], node[PGUvqKaISbecpf-KUlFWNQ], [P], s[STARTED], a[id=Tcby6wzFSpCTISIcuDnw-Q]]"
        }
      ]
    }
  ]
}
```

The endpoint I used was:

.opensearch-sap-log-types-config/_settings

The corresponding payload is the same as before:

```json
{
  "index": {
    "number_of_replicas": 0
  }
}
```

The success result is the same:

```json
{
  "acknowledged": true
}
```

Return to the health screen and verify that no unassigned shards remain and that the cluster status is "Green".
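To verify from a script rather than from the health screen, note that the _cluster/health endpoint accepts wait_for_status and timeout parameters. A request can therefore block until the cluster turns green, or give up and report timed_out. Below is a sketch of building that path and checking the response. The helper names are hypothetical.

```python
from urllib.parse import urlencode


def green_wait_path(timeout: str = "30s") -> str:
    """REST path that waits up to `timeout` for the cluster to turn green."""
    return "_cluster/health?" + urlencode({"wait_for_status": "green", "timeout": timeout})


def is_fixed(health: dict) -> bool:
    """True when the health response is green with no unassigned shards."""
    return (
        not health.get("timed_out", False)
        and health.get("status") == "green"
        and health.get("unassigned_shards") == 0
    )


print(green_wait_path())  # _cluster/health?wait_for_status=green&timeout=30s
print(is_fixed({"status": "green", "unassigned_shards": 0, "timed_out": False}))  # True
```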

